Last year, I eagerly anticipated the release of Google Drive. I had complained a lot about my experiences with other synchronization software, and fully expected Google to knock this one out of the park. It's an application that should play to Google's strengths: systems software, storage, and scaling distributed systems.

In spring 2012, I made the leap and moved all my personal cloud storage over to Google, but I ran into too many technical problems and gave up. (I detail some of these problems below.) Now, a year later, I wanted to check in again and see whether things have improved.

I'm afraid I have to report that there are still major deal breakers for me, and perhaps for other "power users":

- Scalability isn't there. For example, if you try the simple benchmark of adding a folder with thousands of very small files, you'll see that maximum throughput is a few files per second.

- Getting stuck ("Unable to sync") seems common.

- Symlinks are still ignored.

Of course, these services all work great for storing small numbers of medium-sized files. Maybe there's no desire or need to support scaling and more intensive use? Yet I think even non-techie users may end up with large numbers of small files even if they don't create them directly (e.g. in my Aperture library). For myself, I ultimately want something closer to a distributed file system. For example, I like to edit files within a git checkout locally on my laptop and have them synced to a server where I run the code. This requires three things:

- Cross-platform -- Linux/Mac in my case.

- Low latency -- file edits should appear quickly on the other side.

- Equally good treatment of large numbers of small files and small numbers of large files.

The end result is that I still use the same solution I did ten years ago: I run "unison -repeat 2" to link working copies on different machines. The only thing missing is convenient file-system watching via inotify (i.e. OS-driven notification of changes rather than scanning). This is the killer feature that many of the newer cloud offerings have compared to Unison, and it is the key to low latency, as well as to the always-on usage model that Dropbox-style systems employ. Unison has rudimentary support for integrating with a file-system watcher, and I've sporadically had that functionality working, but it was fragile and hard to set up the last time I tried it.
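Without a watcher, a tool running in `-repeat` mode has to find changes by rescanning. The sketch below is a simplified model of what such a scan amounts to, not Unison's actual algorithm, and the function names are hypothetical. It stats every file, then diffs the result against the previous snapshot. Its cost grows with the number of files even when nothing has changed. OS-level notification (inotify on Linux, FSEvents on the Mac) avoids exactly this cost.

```python
import os
from typing import Dict, List, Tuple

# Snapshot: relative path -> (size in bytes, mtime in nanoseconds).
Snapshot = Dict[str, Tuple[int, int]]


def take_snapshot(root: str) -> Snapshot:
    """Stat every file under root. Cost is proportional to the file count,
    whether or not anything changed."""
    snap: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)  # lstat: report symlinks themselves, don't follow them
            snap[os.path.relpath(path, root)] = (st.st_size, st.st_mtime_ns)
    return snap


def diff_snapshots(old: Snapshot, new: Snapshot) -> Dict[str, List[str]]:
    """Classify paths as added, removed, or modified between two scans."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(p for p in old.keys() & new.keys() if old[p] != new[p]),
    }
```

A polling loop in this model would call `take_snapshot` every 2 seconds (mirroring `-repeat 2`) and act on the diff. With tens of thousands of files, every pass is a full tree walk, which is why scan-based tools trade off latency against load.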

A simple benchmark

One thing that was frustrating when Google Drive was released was that there was a plethora of articles and blog posts about strategic positioning, competition between the big cloud-storage players on pricing and features, etc., and yet I couldn't find technical reviews covering correctness features like conflict resolution, or objective performance (i.e. benchmarks, not "seems snappy"). As a community, do we not have good sites that review software like this, the same way new graphics cards or digital cameras get benchmarked?

This time around I decided to do a simple benchmark myself. I grabbed a small bioinformatics dataset (3255 small text files adding up to 15 MB) and dropped it into cloud storage. (Bioinformatics software tends to create large numbers of files; in this case, per-gene files for a single species.) How long do different solutions take to upload this directory?

As a baseline, it took 1.4 seconds to copy it on my laptop's local disk (cold cache). Rsync over the LAN took a similar 1.6 seconds. Rsync from my home to a remote machine, through ~500 miles of internet, took about 12.5 seconds. (By contrast, "scp" took 264 seconds, another good example of good vs. bad small-file handling.)
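To reproduce the local baseline on other hardware without the original dataset, one could generate a synthetic stand-in of the same shape. The sketch below makes several assumptions:

- It creates 3255 files of 4700 bytes each (about 15 MB in total).
- The file names and contents are made up.
- Because the files were just written, the copy runs against a warm cache rather than the cold cache measured above.

```python
import os
import shutil
import tempfile
import time

FILE_COUNT = 3255  # matches the dataset size above
FILE_SIZE = 4700   # bytes; 3255 * 4700 is roughly 15 MB (assumed uniform size)


def make_small_files(root: str, count: int = FILE_COUNT, size: int = FILE_SIZE) -> None:
    """Create `count` synthetic files of exactly `size` bytes under root."""
    os.makedirs(root, exist_ok=True)
    payload = b"ACGT" * (size // 4) + b"A" * (size % 4)
    for i in range(count):
        with open(os.path.join(root, f"gene_{i:05d}.txt"), "wb") as f:
            f.write(payload)


def time_copy(src: str, dst: str) -> float:
    """Return the wall-clock seconds taken to copy the tree src -> dst."""
    start = time.perf_counter()
    shutil.copytree(src, dst)
    return time.perf_counter() - start


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "dataset")
        make_small_files(src)
        secs = time_copy(src, os.path.join(tmp, "copy"))
        print(f"copied {FILE_COUNT} files in {secs:.2f} s ({FILE_COUNT / secs:.0f} files/s)")
```

For the cloud clients, the same generated folder can be dropped into the synced directory. However, the end of the sync still has to be read off the client's own status display or a stopwatch.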

This is a test under typical (uncontrolled) conditions, with lots of applications open on my MacBook Pro, transferring either to the cloud or to a mediocre Linux workstation over the WAN. The total time to upload this folder varied wildly between tools. Most of the data points above were taken by hand with a stopwatch, but Google Drive would run into an "Unable to Sync" error and require restarting, so I couldn't time it in one go. Instead, I estimated the rate at which it was uploading files and extrapolated, which gives an approximate time of an hour (3600 seconds) to complete the sync. At the other end of the spectrum, Unison can start up, scan the directories, and do the transfer in 28.6 seconds.
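The Google Drive figure is a constant-rate extrapolation, and the other times are easier to compare as per-file throughput. Below is a small sketch of both calculations, using only the times reported above. The helper names are illustrative.

```python
TOTAL_FILES = 3255

# Wall-clock times from the text, in seconds; Google Drive is the ~1 hour estimate.
REPORTED_SECONDS = {
    "local copy (cold cache)": 1.4,
    "rsync over LAN": 1.6,
    "rsync over WAN": 12.5,
    "unison": 28.6,
    "scp": 264.0,
    "Google Drive (extrapolated)": 3600.0,
}


def extrapolate_seconds(files_done: int, elapsed_s: float, total: int = TOTAL_FILES) -> float:
    """Estimate the full sync time from partial progress, assuming a constant rate."""
    return total * elapsed_s / files_done


def files_per_second(seconds: float, total: int = TOTAL_FILES) -> float:
    """Average throughput over the whole benchmark."""
    return total / seconds


if __name__ == "__main__":
    for tool, secs in sorted(REPORTED_SECONDS.items(), key=lambda kv: kv[1]):
        print(f"{tool:28s} {secs:8.1f} s {files_per_second(secs):8.1f} files/s")
```

By this measure, Google Drive manages under one file per second. Rsync to the remote machine moves about 260 files per second, versus about 12 for scp, which is the small-file overhead the scp comparison points at.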

Having heard of them on a recent podcast, I also added the new BitTorrent Sync and AeroFS to the mix. These provide a decentralized, peer-to-peer alternative to Google Drive and Dropbox. BitTorrent Sync took 65 seconds on the benchmark, which was comparatively good. Alas, BitTorrent Sync also had a raw latency of 12-18 seconds between changing a single file and that file appearing on another machine (even on the LAN). AeroFS, on the other hand, another decentralized system, had the best latency I'd seen for single edits (a couple of seconds), but much worse throughput on the 3255-file benchmark. Dropbox achieves pretty good single-edit latency (5 seconds in previous tests), but only so-so throughput on the benchmark.
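Single-edit latency like the 12-18 seconds above can be measured more consistently than by eye. Below is a minimal sketch with these assumptions:

- The other machine's copy of the synced folder is visible locally (for example through an NFS or sshfs mount), so one clock times both ends. Such a mount adds its own caching delay.
- The probe-file naming is arbitrary.

```python
import os
import time
import uuid
from typing import Optional


def measure_sync_latency(local_dir: str, remote_dir: str,
                         timeout_s: float = 120.0, poll_s: float = 0.1) -> Optional[float]:
    """Write a uniquely named probe file into local_dir and return the seconds until
    it appears in remote_dir with the same contents, or None on timeout."""
    token = uuid.uuid4().hex
    name = f"sync-probe-{token}.txt"  # unique name, so an old probe never matches
    start = time.monotonic()
    with open(os.path.join(local_dir, name), "w") as f:
        f.write(token)
    target = os.path.join(remote_dir, name)
    while time.monotonic() - start < timeout_s:
        try:
            with open(target) as f:
                if f.read() == token:  # ignore empty or partially written copies
                    return time.monotonic() - start
        except FileNotFoundError:
            pass  # not synced yet
        time.sleep(poll_s)
    return None
```

Repeating the measurement several times gives a spread rather than a single number. Each reading can be off by up to `poll_s`, and the probe files should be deleted afterwards.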

The Achilles' heel of each syncing solution

Here's a summary of the problems with each file synchronization solution I've tried.

- Dropbox -- follows symlinks, which is crazy.

- BitTorrent Sync -- bad latency

- AeroFS -- bad throughput

- SpiderOak -- often fails to detect changes on Linux/NFS and allows inconsistent states for unbounded periods of time.

- Wuala -- slow, expensive file system scans

- Unison -- not always on, difficult to achieve FS monitoring / inotify support

- Others -- no Linux client (includes Amazon Cloud Drive, Syncplicity, Box Sync, Microsoft SkyDrive, SugarSync)
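Dropbox follows symlinks while Google Drive ignores them, so it helps to know which links a folder contains before moving it into a sync client. Below is a short sketch that lists every symlink without following any. The function name is hypothetical.

```python
import os
from typing import List, Tuple


def find_symlinks(root: str) -> List[Tuple[str, str]]:
    """Return sorted (relative path, link target) pairs for every symlink under root."""
    links = []
    # os.walk does not descend into symlinked directories by default
    # (followlinks=False), but it still lists them in dirnames.
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                links.append((os.path.relpath(path, root), os.readlink(path)))
    return sorted(links)
```

Any path this reports will be either silently skipped or expanded into a full copy of its target, depending on the client.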

Finally, there are other systems I've heard mentioned but haven't tried yet:

- Symform and other p2p systems

- Ubuntu One -- just found that this has a cross-platform client

- CloudMe -- also has a Linux client

- TeamDrive -- likewise

- Many more on Wikipedia

Addendum: Screenshots from my first round of Google Drive migration

Here's a chronological account of the problems I had when I first migrated to Google Drive. Some of these may now be out of date.

(1) Many "Can't sync" errors, including many "unknown issue" errors that would then succeed on retry. Unfortunately, on the Mac these [hundreds of] errors were displayed in a small, non-resizable window.

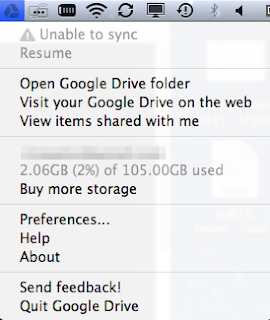

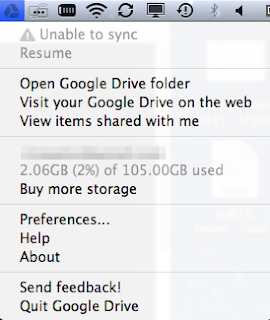

(2) When it got into an "Unable to sync" state, the Resume button would be greyed out, so I would quit and restart the client instead.

(3) Typical overnight progress on small files amounted to 8000 files over 6 hours, with >700 mysteriously failed files. (Most would work on retry.)

(4) A minor GUI issue is that the green check mark would appear next to symlinks, which is a bit misleading, since they are actually ignored by the sync client.

(5) I would continue to see errors regarding syncing a file that was completely GONE from the local file system.

(6) Another minor GUI issue was that the Finder icons would still include blue "still syncing" icons even while the status bar reported "Sync complete".

(7) The client would often use 100-130% CPU, sometimes even when it was in the "Sync complete" state.

(8) I observed a 9GB discrepancy between the desktop app's and the website's reports of my current drive usage.

(9) I had a spurious file-duplication event that created identical copies of 150 files and directories. The files were bitwise identical; there was no actual conflict.

(10) Finally, I got a bunch of errors, which would culminate in me needing to disconnect the account. But, most surprisingly, re-associating the account required deleting everything, and re-downloading the entire ~30GB Google Drive directory.
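Item (9) turns on whether the duplicated copies are bitwise identical rather than genuine conflicts. One way to check is to group files first by size, then by content hash. Below is a sketch using SHA-256; any strong hash would do, and the function names are illustrative.

```python
import hashlib
import os
from collections import defaultdict
from typing import Dict, List


def sha256_of(path: str, chunk: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()


def find_identical_files(root: str) -> List[List[str]]:
    """Return sorted groups of relative paths whose contents are bitwise identical."""
    by_size: Dict[int, List[str]] = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            p = os.path.join(dirpath, name)
            if os.path.isfile(p) and not os.path.islink(p):
                by_size[os.path.getsize(p)].append(p)
    groups: List[List[str]] = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a file with a unique size cannot have an identical twin
        by_hash: Dict[str, List[str]] = defaultdict(list)
        for p in paths:
            by_hash[sha256_of(p)].append(os.path.relpath(p, root))
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return sorted(groups)
```

The size pre-filter means only files that could possibly match are read and hashed, which matters for a ~30GB folder.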

I rather prefer the mass notifications, as they tell me what's new!

Thanks for this write-up!

I was also hoping that Google would knock it out of the park, but was similarly disappointed early on by its basic lack of robustness: http://cpbotha.net/2012/04/27/google-drive-not-reliable-yet-but-potential/

Recently I moved all my data away from my Dropbox Pro account due to privacy concerns (http://cpbotha.net/2013/09/15/dear-usa-my-data-has-left-your-building/) and am now using BitTorrent Sync between my various workstations, laptops, and a Synology NAS. My largest sync repo has over 120k files of varying sizes (about 50 GB).

I really like BitTorrent Sync's peer-to-peer syncing (my NAS is not beefy enough to act as the central node for everything), but even with version 1.1.70, btsync often gets stuck on some files or, in some really irritating cases, manages to get my git repo's object store and working directory out of sync (I'm not 100% sure, but it almost looks like it somehow reverts to an older version of the index).

For me a very important use case is the one where I get up from my workstation, and continue working on the same code on my laptop. Dropbox handled this flawlessly for years, but btsync drops the ball every now and then.

I'm hoping that btsync 1.2 fixes more of these bugs; otherwise I'll have to continue searching for alternatives. Before Dropbox I also used Unison, and indeed the manual sync was quite inconvenient. Still, it was rock solid.

Nice post, Ryan. I'm struggling with the same requirements as you are (running OS X), though.

Torn between Dropbox and BTsync at the moment.

The worst thing about Google Drive is that it asks whether you want to move files to the Trash each time, and requires you to confirm yes or no in its stupid pop-up! So annoying!

Wow, thank god I'm not alone here. I've been going nuts the last few days re-evaluating Drive, because I thought putting it down for a year or two might do the trick. Yeah, right... I had to resort to creating a daemon just to keep the damn app alive, especially during large syncs, as mentioned. Now it's stuck on "Syncing 1 of 1" and for the life of me I can't figure out which file it is, as all the files have checkmarks and the 2 enclosing folders have blue sync icons.

How fast was your computer? Either AeroFS got *much* faster, or your CPU is slower. Apparently syncing small files is CPU-bound, but the performance I saw was ~100 times better on Gigabit Ethernet, so I guess the overhead would be acceptable over the Internet.

My only gripe is that symlinks aren't transferred, so I'm gonna try this gist to work around that: https://gist.github.com/kogir/5417236. (Alternatively, since AeroFS treats timestamps correctly, one can also run rsync on the results.)

I've tried AeroFS 0.8.8 here (very unscientifically) to move 6G between my computers, including a few source code trees, for a total of 38184 files, over Gigabit Ethernet (over Thunderbolt). This was between an iMac 2013 with a 2.5GHz Intel i5 CPU (quad core, Fusion Drive) and a rMBP 2013 with a 2.6GHz Intel i5 CPU (dual core + multithreading, a pure SSD), both with OS X 10.9.2.

Since that transfer felt quite fast, I've done some unscientific benchmarking with another source tree. Size:

```shell
$ du -sh Sorgenti/Delite/
751M Sorgenti/Delite/
$ find Sorgenti/Delite/|wc -l
36727
$ find Sorgenti/Delite/ -type f|wc -l
34311
```

This data was transferred in ~4 minutes, less than half the time you report, for ~10x more files and ~70x more data. The bandwidth used oscillated between 1 MB/s and 10 MB/s, while for the previous transfer (which also included huge files) I saw as much as 100-150 MB/s (close to Gigabit Ethernet bandwidth).

The process was absolutely CPU-bound (at least on the receiving computer), with aerofsd using 100% CPU.

Since both computers are mine and realistically I'll be using one at a time, right now AeroFS seems the best approach. But I'll have to see how it behaves without a direct Gigabit Ethernet connection.

Very nice post, and you're spot on. I keep waiting for someone to build an open-source, cross-platform syncing tool that uses OS-monitoring APIs and backs up to cloud APIs...

Actually, it seems Unison now has fswatch support baked in, so that's nice!

http://www.seas.upenn.edu/~bcpierce/unison//download/releases/beta/unison-manual.html#news

Maybe take a look at https://github.com/syncthing/syncthing too.

Open source, and getting a lot of attention.

I'm considering switching from Unison, because I want something more robust in terms of "always-on" and auto-resumption.
